Think Tank: Automated AI Expert Group

Introduction

We have an old saying in Ukrainian, "One head is good, but two are better." The importance of collaboration cannot be overemphasized. In most, if not all, fields of human activity, groups of people do better than individuals alone. Take sport, for instance. No matter how biologically talented and self-motivated an athlete is, to achieve anything meaningful, they need a team. The same goes for science, engineering, manufacturing, etc. Honestly, I cannot think of anything that collaboration would not improve.

However, it is not only about having more than one person. It is about the diversity of skills and experience. Indeed, a diversity of points of view or mental models always yields a more complete picture of reality, more options to choose from, and more solutions to a problem.

Now that we have access to virtually any expert at our disposal 24/7 via LLMs, applying the collaborative principles to LLM interactions only makes sense. If you are unfamiliar with "Personas" as it applies to LLM prompting, I recommend you read Mastering Effective Prompting Part 1. In short, "A persona, in the context of interacting with an AI like ChatGPT, is a predefined character or role we assign to the chatbot." Using this simple concept, we can create experts, and using a little bit of code, we can make those experts talk to each other.

For the impatient

If you want to dive into the code first, you can find my Proof of Concept implementation of Think Tank here. Since it is a Jupyter notebook, you can see the output that I have generated, clone it to your Google Colab, and play with the code without needing to set anything up locally. Happy hacking!

Concept: The "Think Tank"

Motivation

I am using an LLM as the primary driver of my product, and this product solves very complex problems. Much research was in progress when I started. However, there was little understanding of the vast potential that lay in combining "mere" LLMs with traditional programming for tackling multi-step, exploratory problems.

I learned about personas fairly early on, and that made me think, what if I create a bunch of different personas replicating professions involved in the domain I am working on? So, I created a virtual team. The result was astounding. "They" were able to plan, execute, and validate the outcome using the tools I have provided "them." Now, this approach is used by projects like ChatDev or Dev-GPT. Both of those tools make use of a virtual tech company (ChatDev) or virtual dev team (Dev-GPT).

Now, think about this: whenever you ask something of your chatbot, you are a) talking to one expert, and b) you have to craft that persona. What if you did not have to do preliminary work whenever you want to ask a question that might benefit from the diversity of opinions? Just ask the question, and personas will be identified automatically and express their opinions.

Why "Think Tank"

I was inspired to go toward multi-agent, collaborative frameworks by the "Three Amigos" technique. "Three Amigos" aims to bring Business, Development, and Testing experts together to examine work before implementing it. I used this technique professionally, and it works wonders. We uncovered "hidden" requirements, architectural issues, and possible regressions caused by introducing the new functionality. Having a similar discussion between, say, only the developers will not provide such a breadth of perspective.

From ChatGPT:

Think tanks have long been a cornerstone in policy-making, research, and innovation across various fields. They bring together a diverse group of experts who collaboratively tackle complex issues, drawing from a wealth of knowledge and differing perspectives to formulate well-rounded solutions. The essence of a think tank lies in its collaborative nature and the synergy that arises from the interplay of various expertise.

I toyed with various concepts, but "Think Tank" is a generic enough concept that captures the fact that there is a group of diverse experts without being bound to a specific domain or posing a limit on the number of experts involved. As you will see in the following sections, this framework can be applied to any domain and used to solve generic problems or as a "sub-team" of a larger, virtual organization.

The Virtual Expert Group

Let me give you a refresher on what persona definition looks like (generated by GPT-4):

You are a Work-Life Balance Coach, helping individuals balance their professional and personal lives.

Mechanically, you add the sentence above to your prompt to the LLM. Some models, like the GPT family, reserve a part of their context called the "system message," so I put all the important instructions there.

In Mastering Effective Prompting Part 3, I provided insights on how chat can be implemented. In case you missed it: you simply record all the back-and-forth interactions into an ever-growing prompt. Each time you as a user send a message, this message and the response become part of future prompts. Please note that this simplification hides all the optimizations and nuances of what is actually going on. So, the ongoing prompt with the GPT model would look like this:

System Message: ...

User: ...

AI: ...

User: ...

AI: ...

...
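The ever-growing prompt described above can be sketched as a plain list of role-tagged messages. This is only an illustration: `fake_llm` is a hypothetical stand-in for a real model call, and the `role`/`content` keys follow the common chat-message convention rather than any specific code from this article.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def fake_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a real model call; it only reports the prompt size."""
    return f"(reply based on {len(messages)} messages)"


class Chat:
    """Keeps the whole conversation and resends it on every turn."""

    def __init__(self, system_message: str, llm: Callable[[List[Message]], str] = fake_llm):
        self.llm = llm
        # The system message always sits at the top of the prompt.
        self.messages: List[Message] = [{"role": "system", "content": system_message}]

    def send(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = self.llm(self.messages)  # the model sees the full history
        self.messages.append({"role": "assistant", "content": reply})
        return reply


chat = Chat("You are a Work-Life Balance Coach.")
first = chat.send("How do I stop working on weekends?")
second = chat.send("And what about evenings?")
```

Each call to `send` grows the list by two entries, which is why long conversations eventually run into the model's context limit.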

But what if you have multiple AI Agents? What if there are only AI Agents? The fundamental structure is still the same, besides the fact that from the perspective of the agent, the other agents are "User".
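One way to picture "the other agents are User" is to keep a single shared transcript and re-render it for whichever agent speaks next. The sketch below is my assumption of how that could look; `prompt_for` and the sample speakers are hypothetical names, not part of any library.

```python
from typing import Dict, List, Tuple

Turn = Tuple[str, str]  # (speaker name, text)


def prompt_for(agent: str, system_message: str, transcript: List[Turn]) -> List[Dict[str, str]]:
    """Render the shared transcript from one agent's point of view."""
    messages = [{"role": "system", "content": system_message}]
    for speaker, text in transcript:
        if speaker == agent:
            # The agent's own earlier turns are its "AI" messages.
            messages.append({"role": "assistant", "content": text})
        else:
            # Everyone else looks like a user; prefix the name to tell them apart.
            messages.append({"role": "user", "content": f"{speaker}: {text}"})
    return messages


transcript = [
    ("Chat Admin", "How should we reduce build times?"),
    ("Software Engineer", "Cache dependencies between builds."),
    ("Tester", "Run only the tests affected by a change."),
]
engineer_view = prompt_for("Software Engineer", "You are a backend software engineer.", transcript)
roles = [m["role"] for m in engineer_view]
```

The same transcript rendered for the Tester would flip the roles: the engineer's turn becomes a user message and the tester's own turn becomes the assistant message.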

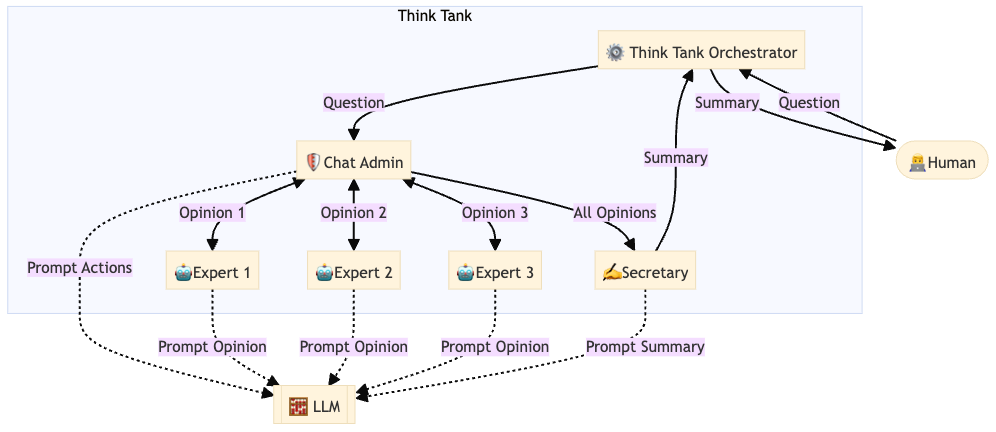

Below is a flowchart which explains how this multi-agent chat might be visualized.

Let me walk you through this process:

- A Human user asks a question. Think Tank directs this question to the Chat Admin

- Chat Admin queries all the experts for their opinions

- The Chat Admin asks the Secretary to write a summary

- Think Tank returns this summary to the user. Possibly with the full transcript of the conversation.

There are plenty of nuances and options in the implementation. For instance:

- It would make sense to further ask the experts to discuss the problem at hand and provide more polished opinions.

- Having or not having an explicit role of a Secretary is also optional.

- Add an explicit role of critic to further prompt refinement of the ideas expressed by the experts.

- Etc.

The goal of the diagram above is to help you visualize what this process would look like, not to create the best architecture for this framework.
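The four steps above can be sketched in a few lines of plain Python. This is a simplified illustration under my own assumptions, not the code from the notebook: `ask` is a hypothetical hook for whatever LLM client you use, and `echo_ask` is a stub so the sketch runs offline.

```python
from typing import Callable, Dict, List, Tuple

# ask(system_message, prompt) -> reply; a hypothetical hook for any LLM client.
Ask = Callable[[str, str], str]


def run_think_tank(question: str, experts: Dict[str, str], ask: Ask) -> Tuple[str, List[str]]:
    """Collect one opinion per expert, then let a Secretary summarize them."""
    transcript = [f"Chat Admin: {question}"]
    for name, system_message in experts.items():
        # Each expert sees the question plus everything said so far.
        opinion = ask(system_message, "\n".join(transcript))
        transcript.append(f"{name}: {opinion}")
    summary = ask(
        "You are a Secretary. Summarize the discussion for the user.",
        "\n".join(transcript),
    )
    return summary, transcript


def echo_ask(system_message: str, prompt: str) -> str:
    """Stub model so the sketch runs offline; replace with a real LLM call."""
    return f"[{system_message.split('.')[0]}] saw {len(prompt.splitlines())} line(s)"


experts = {
    "Software Engineer": "You are a backend software engineer.",
    "Editor": "You are an editor of a Computer Science publication.",
}
summary, transcript = run_think_tank("How do LLMs help backend work?", experts, echo_ask)
```

Returning the transcript alongside the summary matches the last step of the walkthrough, where the user may also receive the full conversation. A discussion round or a critic role would be an extra loop before the Secretary is called.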

Automated Persona Creation

One pain point that I touched on at the beginning of this article is that creating personas is manual work. Whereas it might be desirable in some cases to fine-tune the persona(s) for the task at hand, in the case of Think Tank as a generic framework, it is not. Thankfully, automating the identification and definition of experts in virtually any domain out of just one question is trivial nowadays.

All we need is a) a prompt with b) a few tricks up our sleeve that we have learned from all those "Mastering Effective Prompting Part N" articles, and c) an LLM to do the inference for us. Here is an example of a prompt:

I need to define personas for a task. Those personas should describe a diverse list of experts in the domain of the question.

You will be given a question and you will return me {number_of_personas} distinct personas that would be the best experts in the domain you identify for the question. Define exactly {number_of_personas} personas.

You will return your response as a JSON document and nothing else.

For example:

User: I want to write an article about the application of LLMs in backend software engineering

AI:

{

"Domain": "Computer Science",

"Personas": {

"Software Engineer": "You are a backend software engineer.",

"Machine Learning Scientist": "You are a Machine Learning Scientist with expertise in Large Language Models and Natural Language Processing",

"Editor": "You are an editor of a Computer Science publication",

...

}

}

User: {question}

Let's look at this prompt. Here, we use a few techniques:

- We use structural data extraction, as we are asking the model to return us a JSON.

- We use a one-shot prompt. I find that with GPT models, one shot is usually enough, and compared to zero-shot, one-shot yields much more consistent results.

- You can see {number_of_personas}, which is a parameter we use for this prompt. I wanted to keep it flexible and not hardcode the number of personas.
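Filling the two placeholders has one small catch: the one-shot example contains literal JSON braces, so `str.format()` would raise an error on it. A minimal sketch that sidesteps this with plain `replace()` is shown below; the template is an abridged copy of the prompt above, and `build_persona_prompt` is a hypothetical helper name.

```python
# Abridged version of the prompt above; only the two placeholders are dynamic.
PERSONA_PROMPT = """You will be given a question and you will return me {number_of_personas} distinct personas.
You will return your response as a JSON document and nothing else.

For example:
User: I want to write an article about the application of LLMs in backend software engineering
AI:
{
  "Domain": "Computer Science",
  "Personas": {
    "Software Engineer": "You are a backend software engineer."
  }
}

User: {question}"""


def build_persona_prompt(question: str, number_of_personas: int) -> str:
    """Fill the two placeholders without touching the literal JSON braces."""
    # Replace the count first so a question containing that token is left alone.
    return (
        PERSONA_PROMPT
        .replace("{number_of_personas}", str(number_of_personas))
        .replace("{question}", question)
    )


prompt = build_persona_prompt("How do I plan a vegetable garden?", 3)
```

An alternative is to double every literal brace in the template and keep using `str.format()`, but that makes the JSON example harder to read and edit.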

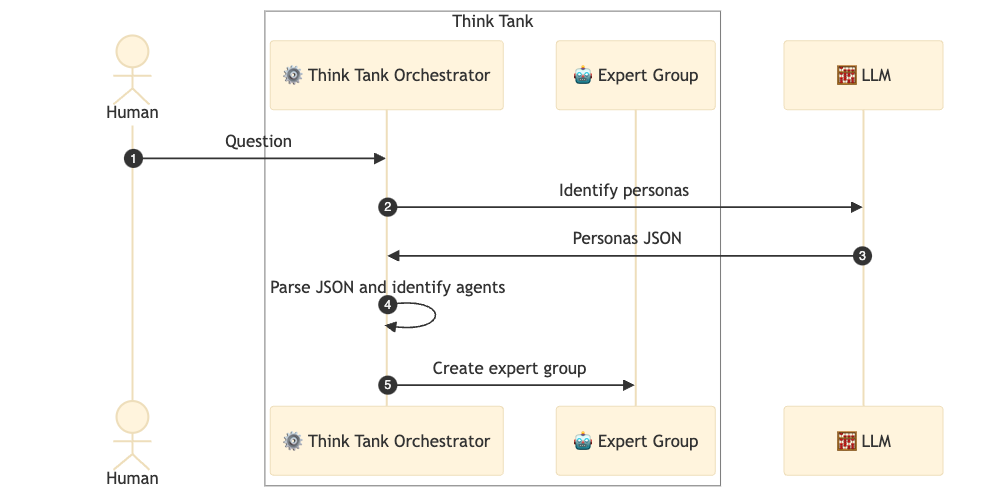

The reason we want a JSON back is straightforward: we can parse it programmatically and use it to form our expert group. Here is a sequence diagram explaining how this JSON will be created and used:

Let's walk through the sequence diagram above:

- As in the previous part, Human asks a question.

- Think Tank sends the prompt you saw above, with the {question} from the Human, to an LLM.

- The LLM responds with the JSON containing the persona definitions.

- Think Tank parses the JSON and identifies the experts needed to answer this specific question.

- Think Tank proceeds to create the AI Agents that would form the Expert Group.

- The rest is the same as described in the previous chapter.
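The parsing step in the sequence above could look roughly like this. It is a sketch under my own assumptions: it expects the "Domain" and "Personas" keys from the example prompt, and `parse_personas` is a hypothetical helper, not a function from the notebook or from Autogen.

```python
import json
from typing import Dict, Tuple


def parse_personas(raw: str, expected: int) -> Tuple[str, Dict[str, str]]:
    """Turn the model's JSON reply into (domain, {persona name: system message})."""
    # Models sometimes wrap JSON in prose or code fences; keep only the outermost object.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the model reply")
    data = json.loads(raw[start : end + 1])
    personas = data["Personas"]
    if not isinstance(personas, dict) or len(personas) != expected:
        raise ValueError(f"expected {expected} personas, got {personas!r}")
    return data["Domain"], {str(k): str(v) for k, v in personas.items()}


reply = """Here you go:
{
  "Domain": "Horticulture",
  "Personas": {
    "Botanist": "You are a botanist specializing in vegetables.",
    "Landscape Designer": "You are a landscape designer for small gardens."
  }
}"""
domain, personas = parse_personas(reply, expected=2)
```

Checking the persona count is worth the extra line: even with "Define exactly N personas" in the prompt, a model can return more or fewer, and a retry is cheaper than a lopsided expert group.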

Implementation using Autogen Library

I have implemented "Think Tank" using Autogen; you can find the code and use it yourself here. If you want to play with the code, just press "Open in Colab", and Google will provide you with hardware to run it, or you can connect it to your own Jupyter server.

About Autogen

Autogen is a library that powered a few Research Papers out of Microsoft. It is open-source and very powerful. It is also very new and unpolished, so using it is not as straightforward as I would want.

Here is how the authors describe it:

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

This library popped up on my radar around a month ago, and from looking at the usage examples, it became apparent to me that a) this library is super powerful, and b) I can implement the idea of "Think Tank" in a few dozen lines of code.

Here is an example of how easy it is to create an AI Agent with Autogen:

import autogen

assistant = autogen.AssistantAgent(

name="assistant",

system_message="You are a helpful assistant."

)

user_proxy = autogen.UserProxyAgent(

name="user_proxy",

human_input_mode="NEVER"

)

user_proxy.initiate_chat(assistant, message="What is the square root of nine?")

As mentioned above, if you want to see the library in action, explore the examples of usage notebooks.
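To connect this back to the expert group, here is a rough sketch of how parsed personas might be turned into an Autogen group chat. It is not the notebook's code. It assumes the 0.2-style `pyautogen` API (`AssistantAgent`, `UserProxyAgent`, `GroupChat`, `GroupChatManager`) and an `llm_config` dictionary that you supply. `to_agent_name` and `ask_think_tank` are hypothetical helpers; the name cleanup is there on the assumption that the chat API only accepts agent names made of letters, digits, underscores, and hyphens.

```python
import re
from typing import Any, Dict


def to_agent_name(persona: str) -> str:
    """Make a persona title safe to use as an agent name (letters, digits, _ and -)."""
    return re.sub(r"[^A-Za-z0-9_-]+", "_", persona.strip())[:64]


def ask_think_tank(question: str, personas: Dict[str, str], llm_config: Dict[str, Any]) -> None:
    """Sketch: one AssistantAgent per persona, all talking in an Autogen group chat."""
    import autogen  # imported lazily so the helper above works without the library

    experts = [
        autogen.AssistantAgent(
            name=to_agent_name(title),
            system_message=system_message,
            llm_config=llm_config,
        )
        for title, system_message in personas.items()
    ]
    admin = autogen.UserProxyAgent(
        name="chat_admin",
        human_input_mode="NEVER",
        code_execution_config=False,  # this sketch only talks, it runs no code
    )
    group = autogen.GroupChat(agents=[admin, *experts], messages=[], max_round=6)
    manager = autogen.GroupChatManager(groupchat=group, llm_config=llm_config)
    admin.initiate_chat(manager, message=question)


agent_name = to_agent_name("Machine Learning Scientist")
```

The `max_round=6` cap is an arbitrary choice for illustration; a dedicated Secretary agent for the final summary would be one more `AssistantAgent` in the list.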

Recommendations

Whatever your level of familiarity with the application of GenAI, I would encourage everyone to look through the examples. This will help in understanding what is already possible and how easy it is.

If this is the first time you are seeing code written against an LLM, I would recommend playing with OpenAI's API first. I think it is beneficial to understand the basics first, since Autogen hides LLM interactions completely.

Applications and Implications

I wanted to share some ideas where this framework would be useful. Outside of just obvious advanced chat, where a user wants to dive deep into some particularly complex problem, here are a few more areas in which I think "Think Tank" would be useful.

Marketing. Think about this: you are a marketing specialist, and you know all there is about marketing but not about all the domains you are raising attention to. If you are building a marketing campaign for, say, agricultural equipment, chances are you are no expert in that. I am not talking about marketing specialists chatting directly with the "Think Tank," but rather a software system that marketers use to create campaigns. Those systems automatically assist with advice and materials that are the result of Think Tank's deliberations.

Software. Most people think about software engineering mostly as writing code and deploying software. But we (software engineers especially) often forget to ask "What?" are we building, focusing on "How?" to build it. We often lack domain experts and build things that we think would be useful, without asking people who will be using it if that makes sense. We are already stepping into a new era of software engineering. One can even call it the "Software V2" era (this is the subject of my next article). With tools like ChatDev, imagine, instead of the tool just going and executing whatever you told it, it actually helps you understand and polish your requirements before generating something.

Personal Assistant. I am not the only one who thinks that personal assistants of the near future will be actually useful. With the advancement of AI, personal assistants can gain a lot of capabilities that they can learn without being programmed or trained specifically for. Whenever such an assistant would step into a new domain, it might make sense to "consult" with domain experts.

Anything requiring deliberate problem-solving would potentially benefit from the Think Tank approach. Think Tank can become the central point of any system that is tasked to solve problems independently, forming a group of experts that not only discuss but also execute tasks. As with ChatDev, Think Tank can form the necessary team to produce your software without you explicitly specifying the needed roles.

I believe that this notion of "Inner Monologue" is what enables the true power of LLM-based AI Agents. What I call "Inner Monologue" is the ability of a system to reason about the problem it is solving in order to solve it, as when a virtual group of experts talk among themselves and then the Secretary provides us with the summary. In this scenario, the question is the input, the summary is the output, and the discussion that the experts and the Secretary had is the "Inner Monologue" of the system. Building this monologue out of diverse personas adds breadth to it and (not so) theoretically improves its effectiveness.

Conclusion

The "Think Tank" framework heralds a collaborative approach within the Generative AI domain, enriching the problem-solving process through the synergy of diverse virtual experts. Here are the key takeaways:

- Collaborative Deliberation: Orchestrating multiple personas to emulate a human-like brainstorming session, enhancing problem-solving with a nuanced "Inner Monologue."

- Automatic Expert Deployment: Streamlining the process of identifying and deploying domain experts based on the problem at hand, paving the way for more autonomous and intelligent systems.

- Ease of Implementation: Leveraging libraries like Autogen simplifies the implementation, inviting developers and enthusiasts to explore and extend the framework. The provided code is not merely a proof of concept but a platform for community engagement and innovation.

- Bridging Human and Machine Collaboration: The framework serves as a stride towards intertwining human collaborative ingenuity with machine precision, enriching the AI's capability to understand and deliberate over queries.

- Engagement and Exploration: Delve into the implementation, experiment with the "Think Tank" setup, and envision its integration into your projects or domains.

The journey through Generative AI is exhilarating, with frameworks like "Think Tank" showcasing a collaborative future. It's an invitation to join a venture where AI moves beyond solitary computation to a collaborative environment, ready to tackle the diverse challenges ahead.
