Upcoming article: "Think Tank: Automated AI Expert Group"

Dear subscribers, sorry for the two weeks without content. I have my reasons, but they are still excuses.

I am working on a new article and potentially a new approach to using collaborative AI Agents. I call it "Think Tank".

This approach automatically identifies the right experts for your question or problem, so you do not have to create personas by hand before collecting their opinions.

The application of this approach goes beyond a user asking questions. Multi-agent systems have already proven highly effective at solving complex tasks in tools like ChatDev, so dynamically assembling a group of experts based on the problem would be the next step for such systems.

Preview

While writing code and refining the idea, I wanted to share a sneak peek into the output of "Think Tank." All I had to do was to pose the "Question." The rest (Personas, Summary from the Secretary) is automatically generated.

Question:

I am a software engineer with minimal experience with ML and AI.

I am concerned about the impact of LLMs on the future of software engineering.

What is the possible impact of generative AI and LLMs on software engineering in the short and long term?

Personas

The question is about: Artificial Intelligence and Software Engineering

Chatting with experts:

- AI_Ethics_Researcher: You are a researcher who focuses on the ethical challenges and societal impacts of AI and machine learning

- Software_Engineer: You are a software engineer with a focus on integration of AI or machine learning applications

- AI_Scientist: You are an AI scientist who has a deep understanding of large language models and generative AI

- Technology_Futurist: You are a futurist who predicts and analyses the impact of emerging technologies on society and various industries

Experts' opinions

Summary from the Secretary

Experts:

- AI Ethics Researcher: The impact of generative AI and LLMs on software engineering will significantly automate mundane tasks in the short term. LLMs can enhance collaboration among engineers and improve code quality. However, the long-term effect raises ethical concerns like job security for software engineers and potential misuse of the technology.

- Software Engineer: In the short run, LLMs will boost productivity by automating repetitive tasks, improving code quality, and bug detection. Long-term implications could revolutionize the design, development, and maintenance of software. Nevertheless, there's a potential displacement of certain jobs, necessitating re-skilling and the need to address ethical issues.

- AI Scientist: LLMs promise increased efficiency, productivity, and automation of repetitive tasks but lack a deeper understanding of context. Beyond aiding software design and architecture, they can also facilitate better documentation and communication among engineering teams. Yet, ethical implications such as bias, misinformation, or malicious use must not be overlooked.

- Technology Futurist: LLMs can be game-changers that automate repetitive tasks and improve code quality in the short term. Although they promise transformative long-term impacts, they are still tools to augment human work and lack human creativity and problem-solving abilities. Ethical considerations like bias, fairness, and privacy need to also be addressed.

Main points:

- In the short term, LLMs will automate repetitive tasks and improve code quality; they will also enhance collaborations and facilitate better documentation.

- In the long term, LLMs can significantly streamline and optimize the software engineering process, aid in software design, architecture, and potentially innovate solutions.

- There is a potential risk in the long term for LLMs to displace some software engineering roles leading to redundancy. It underlines the importance of adaptation, gaining new skills, creativity, critical thinking, and domain expertise.

- Ethical implications such as bias, misinformation, malicious use, fairness, and privacy are crucial and must be addressed.

- LLMs are tools to augment, not replace human creativity, critical thinking, and problem-solving abilities in software engineering.
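To make the flow in this preview more concrete (question, generated personas, one opinion per expert, summary from the secretary), here is a minimal Python sketch. It is not the actual "Think Tank" implementation and does not use Autogen. The names `Persona`, `parse_personas`, `think_tank`, and `fake_llm` are hypothetical, and the model is assumed to be any function that takes a system message and a prompt and returns text. The canned `fake_llm` only exists so the sketch runs without an API key.

```python
from dataclasses import dataclass
from typing import Callable, List

# Assumption: an "LLM" is any function (system_message, prompt) -> text.
LLM = Callable[[str, str], str]


@dataclass
class Persona:
    name: str
    system_message: str


def parse_personas(text: str) -> List[Persona]:
    """Parse lines shaped like '- Name: You are ...' into Persona objects."""
    personas = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("- ") or ":" not in line:
            continue  # skip anything that is not a persona line
        name, system_message = line[2:].split(":", 1)
        personas.append(Persona(name.strip(), system_message.strip()))
    return personas


def think_tank(question: str, llm: LLM) -> str:
    # Step 1: let the model pick experts that fit the question.
    listing = llm(
        "You select experts for a discussion.",
        "List experts as '- Name: You are ...' lines for this question:\n" + question,
    )
    personas = parse_personas(listing)

    # Step 2: ask every expert for an opinion, using its persona as the system message.
    opinions = [(p.name, llm(p.system_message, question)) for p in personas]

    # Step 3: a "secretary" condenses the opinions into a summary.
    transcript = "\n".join(f"- {name}: {opinion}" for name, opinion in opinions)
    return llm(
        "You are a secretary who summarizes expert opinions.",
        "Summarize the main points:\n" + transcript,
    )


def fake_llm(system_message: str, prompt: str) -> str:
    """Canned stand-in for a real model call (hypothetical, for demonstration only)."""
    if system_message.startswith("You select experts"):
        return (
            "- AI_Scientist: You are an AI scientist\n"
            "- Software_Engineer: You are a software engineer"
        )
    if system_message.startswith("You are a secretary"):
        return "SUMMARY OF:\n" + prompt.split("\n", 1)[1]
    return f"opinion from [{system_message}]"


if __name__ == "__main__":
    print(think_tank("How will LLMs change software engineering?", fake_llm))
```

Swapping `fake_llm` for a function that calls a real model is the only change needed to try the idea on an actual question. In a multi-agent framework, the three steps would map to separate agents rather than plain function calls.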

What to expect?

I have decided to post bi-weekly, but at the same time, I want to provide profound and exciting ideas. This one takes a little bit more time than I can spare 😔.

I will publish the first article explaining the concept and share the implementation code next Thursday.

Autogen

As a bonus, I am using Autogen to implement it and will probably follow up with an article on Autogen. If you have time, I urge you to look at the library or at least some examples of its usage.

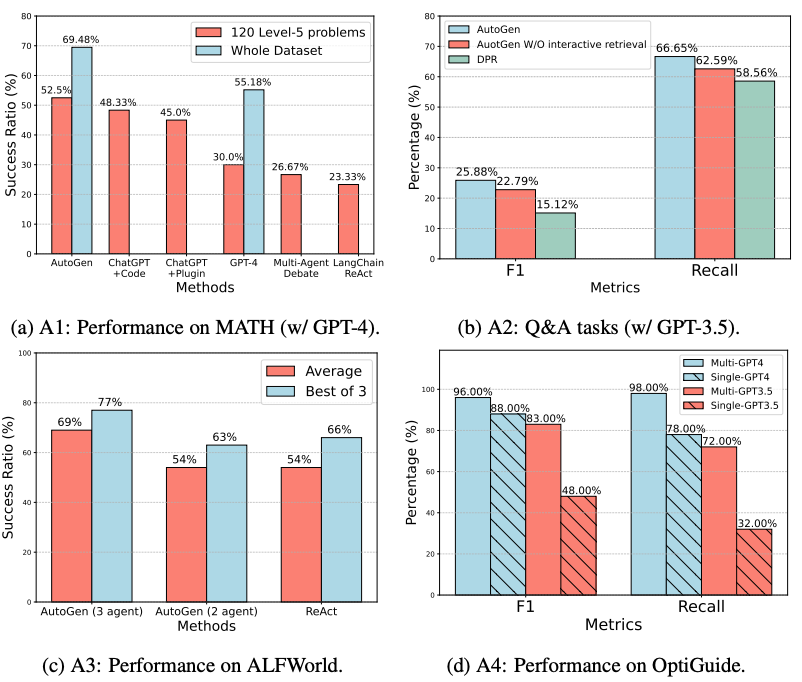

Even though the library is at a very early stage and has very little documentation (which does not help my progress), it has immense potential. It came out of a research paper on the effectiveness of multi-agent conversations, and it is reported to perform better than ChatGPT+code or LangChain.
